A Critique of the IMDb Datasets

In our previous blog post, we learned how to navigate the IMDb Non-Commercial Datasets via the SQL interface of MonetDB. The core features and functionalities of the MonetDB software suite were covered, such as how to create a new database, create tables, load data into those table and perform simple queries.

Querying the data revealed some rather dubious organizational aspects of the datasets since even some relatively simple queries produced incomplete outputs, which necessitated the implementation of quick workarounds. But this does not solve the problem at large since prior knowledge of the problematic aspects of the database is required in order to obtain the desired results. Rather than learning to live with poor database design, let’s investigate the problems that have emerged and how to improve user experience.

Decomposition of nested lists

What complicates querying the unaltered datasets is the presence of nested lists in the form of comma-separated strings. These lists are of variable lengths containing multiple values in a single field.

This problematic feature is evident in the example below, which shows the first 20 records of the title_crew table:

+-----------+---------------------+-----------+

| tconst | directors | writers |

+===========+=====================+===========+

| tt0000001 | nm0005690 | null |

| tt0000002 | nm0721526 | null |

| tt0000003 | nm0721526 | nm0721526 |

| tt0000004 | nm0721526 | null |

| tt0000005 | nm0005690 | null |

| tt0000006 | nm0005690 | null |

| tt0000007 | nm0005690,nm0374658 | null |

| tt0000008 | nm0005690 | null |

| tt0000009 | nm0085156 | nm0085156 |

| tt0000010 | nm0525910 | null |

| tt0000011 | nm0804434 | null |

| tt0000012 | nm0525908,nm0525910 | null |

| tt0000013 | nm0525910 | null |

| tt0000014 | nm0525910 | null |

| tt0000015 | nm0721526 | nm0721526 |

| tt0000016 | nm0525910 | null |

| tt0000017 | nm1587194,nm0804434 | null |

| tt0000018 | nm0804434 | null |

| tt0000019 | nm0932055 | null |

| tt0000020 | nm0010291 | null |

+-----------+---------------------+-----------+

20 tuples

As a result, queries that request records on condition of a given value in the directors column will not pull records in which that value is embedded in a comma-separated string list, unless the query simultaneously extracts the value as a substring. The SPLITPART string function can be used for this purpose since it returns a substring within a string by splitting it on a user-given separator, which in this case is known to always be a comma. It also requires the position of the substring within the string as an input, which is usually not known a priori. In the previous blog post, we saw that using the SPLITPART string function is a useful workaround, but not a lasting solution to our problem given the need for prior knowledge of the value’s position as a substring in the string list.

So how do we fix this?

Crucially, no alterations were made to the structures of the datasets prior to or after being loaded into MonetDB. After witnessing the complications that have arisen as a result of poor data organization, a restructuring of the datasets is warranted in order to permit seamless querying and statistical analyses.

An effective solution would be to decompose the multi-valued strings on the comma separators, extract the values from the substrings and insert new records in which each field comprises a single value.

Following this approach, the first 20 records of the title_crew table should be:

+-----------+-----------+-----------+

| tconst | director | writer |

+===========+===========+===========+

| tt0000001 | nm0005690 | null |

| tt0000002 | nm0721526 | null |

| tt0000003 | nm0721526 | nm0721526 |

| tt0000004 | nm0721526 | null |

| tt0000005 | nm0005690 | null |

| tt0000006 | nm0005690 | null |

| tt0000007 | nm0005690 | null |

| tt0000007 | nm0374658 | null |

| tt0000008 | nm0005690 | null |

| tt0000009 | nm0085156 | nm0085156 |

| tt0000010 | nm0525910 | null |

| tt0000011 | nm0804434 | null |

| tt0000012 | nm0525908 | null |

| tt0000012 | nm0525910 | null |

| tt0000013 | nm0525910 | null |

| tt0000014 | nm0525910 | null |

| tt0000015 | nm0721526 | nm0721526 |

| tt0000016 | nm0525910 | null |

| tt0000017 | nm1587194 | null |

| tt0000017 | nm0804434 | null |

+-----------+-----------+-----------+

20 tuples

Even if some values in the tconst column are repeated, each record should remain unique and each field should be atomic (i.e. further decomposition is not possible).

But it doesn’t have to end here.

Given the presence of many empty fields in the rightmost column of the title_crew table, another advisable step would be to decompose it into two new tables as such:

+-----------+-----------+ +-----------+-----------+

| tconst | director | | tconst | writer |

+===========+===========+ +===========+===========+

| tt0000001 | nm0005690 | | tt0000003 | nm0721526 |

| tt0000002 | nm0721526 | | tt0000009 | nm0085156 |

| tt0000003 | nm0721526 | | tt0000015 | nm0721526 |

| tt0000004 | nm0721526 | | tt0000036 | nm0410331 |

| tt0000005 | nm0005690 | | tt0000076 | nm0410331 |

| tt0000006 | nm0005690 | | tt0000091 | nm0617588 |

| tt0000007 | nm0005690 | | tt0000108 | nm0410331 |

| tt0000007 | nm0374658 | | tt0000109 | nm0410331 |

| tt0000008 | nm0005690 | | tt0000110 | nm0410331 |

| tt0000009 | nm0085156 | | tt0000111 | nm0410331 |

| tt0000010 | nm0525910 | | tt0000112 | nm0410331 |

| tt0000011 | nm0804434 | | tt0000113 | nm0410331 |

| tt0000012 | nm0525908 | | tt0000132 | nm0617588 |

| tt0000012 | nm0525910 | | tt0000138 | nm0617588 |

| tt0000013 | nm0525910 | | tt0000165 | nm0005717 |

| tt0000014 | nm0525910 | | tt0000174 | nm0841389 |

| tt0000015 | nm0721526 | | tt0000181 | nm0674518 |

| tt0000016 | nm0525910 | | tt0000183 | nm0241416 |

| tt0000017 | nm1587194 | | tt0000189 | nm0005717 |

| tt0000017 | nm0804434 | | tt0000190 | nm0841389 |

+-----------+-----------+ +-----------+-----------+

As a result, each record in the newly decomposed tables should remain unique, without any empty fields. For further insight into the methodologies described in this section, look up database normalisation.

Primary-foreign key constraints

Restructuring the datasets as described above will also better suit the inclusion of primary and foreign key constraints, an important aspect of relational database management systems. Primary-foreign key relations were omitted from the database created for the previous blog post due to the lack of atomicity within the fields of columns intended as foreign keys. If each field in the directors column of the title_crew table becomes atomic after restructuring the relevant dataset, then the column can be defined as a foreign key (likewise for the writers column).

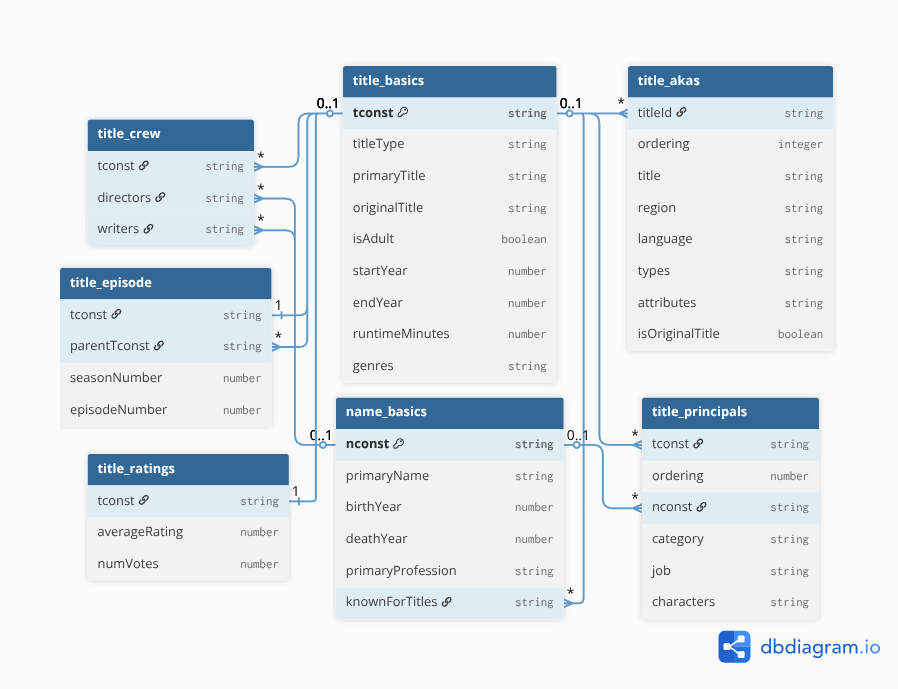

A foreign key in one table refers to the primary key of another table. In the case of the IMDb datasets, name_basics and title_basics are intended as the main tables that all other tables refer to; the nconst column of the name_basics table is intended as a primary key used to identify records of people working in the film industry, whereas the tconst column of the title_basics table is intended as a primary key for identifying individual movie records.

The following visual representation of the schema displays how the unaltered datasets should interrelate:

|

|---|

| Figure 1: IMDb Non-Commercial Datasets Schema |

A point of contention is the fact that the aforementioned primary keys have been stored as strings. Although string keys offer more flexibility given the wider range of eligible characters, more storage space is required to store a string than an integer of similar length. Furthermore, a string key is also more error prone given a greater susceptibility to misspellings compared to a numeric data type. Therefore, it is more sensible to store the primary keys as integers for better performance.

We hope this scrutiny of the various flaws with the IMDb datasets has given you a better grasp of how to organise and design a database for a more optimal user experience.

As always, keep an eye out on our blog and LinkedIn for more updates ;)